Engine covered in glass beads. The MiG-21 Project by Ralph Ziman (Photo by Mauricio Hoyos. All images shared with permission.)


#1 Use Case for AI in 2025 -- Please help me.

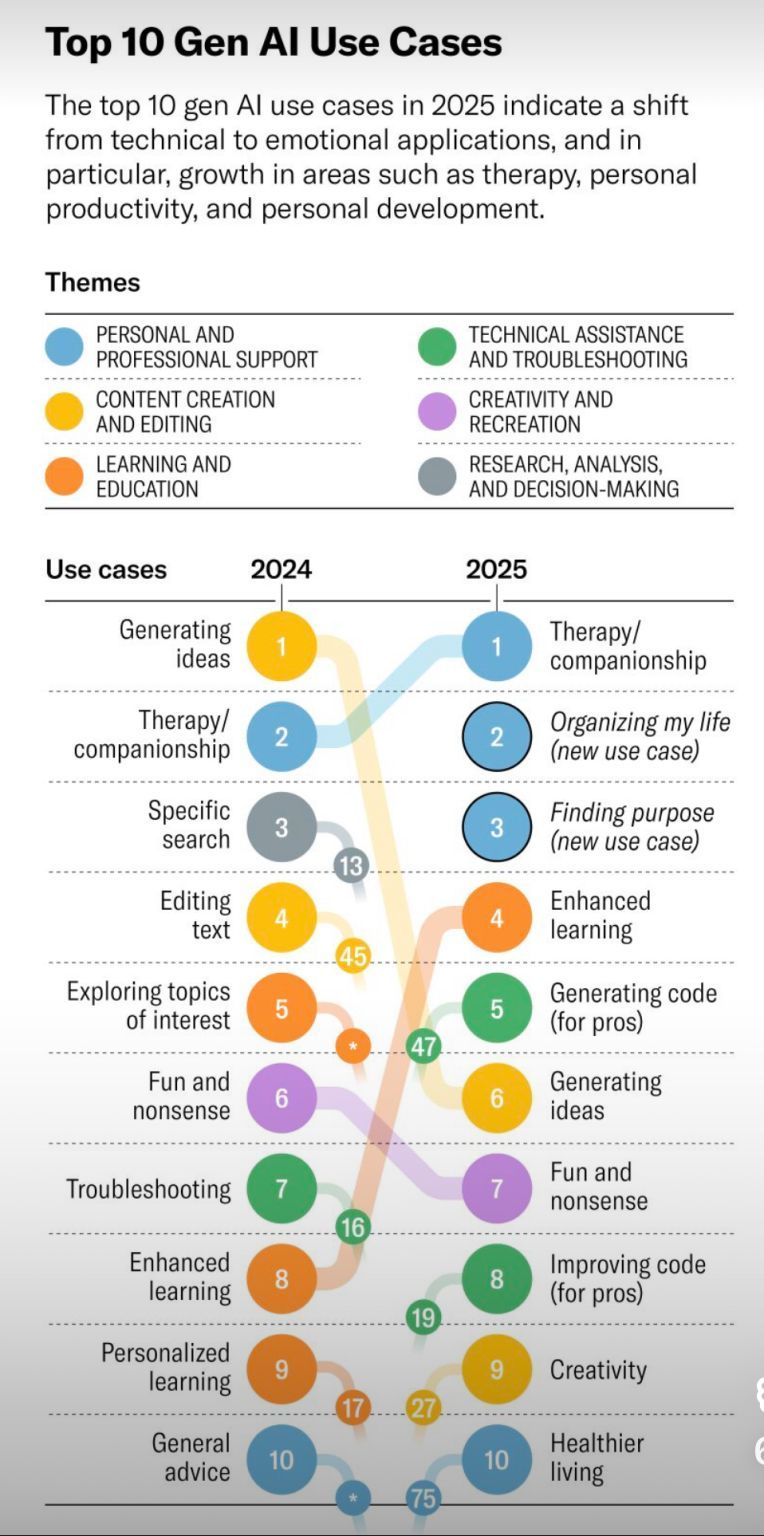

What do people use AI for? According to a Harvard Business Review study of AI use cases in 2025, based on sentiment analysis on Reddit, the number one use case for AI is therapy/companionship. In other words, people are turning to AI for the kinds of things they want but perhaps don’t get from other people. It’s not expertise or information that people are searching for; it’s connection; it’s advice.

It’s probably what they’ve been trained to look for on platforms like TikTok and Instagram, and the pattern even predates the Internet, going back to the early popularity of bulletin boards on CompuServe and AOL. The second use case was “organizing my life,” the third was “finding purpose,” and the fourth was “enhanced learning.” It’s what people once looked for in churches, social clubs, country clubs, service clubs, youth clubs, etc.

My take on the HBR study is that people are looking for, and maybe desperately seeking, help. All kinds of help. They are looking for advice and guidance on what to do with their lives. It’s not just information they want; it’s not an answer they seek. It’s more like a conversation with someone they trust, which is about making a connection.

A few weeks ago, I was in New York City and I went to a Broadway musical called “Maybe Happy Ending.” The musical is about two humanoid robots, so-called helper-bots, living in a retirement village in Seoul, Korea. While the basic story is that the two robots fall in love and sing songs about it, part of the backstory for each robot is that the humans they worked for had basically fallen in love with them, and that’s why they were retired. In one case, a husband was falling for the always-friendly robot; in the other, a man loved the company of his robot so much that it made his son jealous.

Humans are needy. The biggest problem we have to solve for is ourselves. I don’t think it’s a matter of how intelligent the technology is or how smart we are. We want to understand ourselves and be understood.

Beading a MiG-21 Jet

Kate Mothes wrote this story in Colossal about the work of Ralph Ziman, whose MiG-21 Project aimed to cover a military jet with millions of glass beads. It took over five years and involved artisans from Zimbabwe and South Africa working along with Ziman, who has his studio in Los Angeles. “The idea was to take these weapons of war and to repurpose them,” Ziman said.

The MiG-21 Project (Photo by Mauricio Hoyos. All images courtesy of Ralph Ziman, shared with permission.)

Even the cockpit is detailed.

The MiG-21 Project (Photo by Mauricio Hoyos. All images courtesy of Ralph Ziman, shared with permission.)

During this time, the Russians produced a fighter jet called the Mikoyan-Gurevich MiG-21. The plane is “the most-produced supersonic fighter aircraft of all time,” Ziman says. “The Russians built 12,500 MiG-21s, and they’re still in use today... But it’s one thing to say, hey, I want to bead a MiG, and then the next thing, you’ve got a 48-foot MiG sitting in your studio.”

The MiG-21 Project (Photo by Mauricio Hoyos. All images courtesy of Ralph Ziman, shared with permission.)

It’s quite a stunning project.

Augmented Carpentry

Here’s the Augmented Carpentry project from the Laboratory of Timber Constructions (IBOIS) at the École Polytechnique Fédérale de Lausanne (EPFL). Here is a link to the GitHub for the project.

The major XR hardware components for AC are:

A : the computing unit

B : the woodworking electric powertool

C : a tool mount

D : a digitized toolhead

E : an interface

F : a monocular camera

*: Additionally you are required to print stickers to tag the beams.

Here’s the summary of the paper:

An ordinary electric drill was integrated into a context-aware augmented reality (AR) framework to assist in timber-drilling tasks. This study is a preliminary evaluation to detail technical challenges, potential bottlenecks, and accuracy of the proposed object- and tool-aware AR-assisted fabrication systems. In the designed workflow, computer vision tools and sensors are used to implement an inside-out tracking technique for retrofitted drills based on a reverse engineering approach. The approach allows workers to perform complex drilling angle operations according to computer-processed feedback instead of drawing, marking, or jigs. First, the developed methodology was presented, and its various devices and functioning phases were detailed. Second, this first proof of concept was evaluated by experimentally scanning produced drillings and comparing the discrepancies with their respective three-dimensional execution models. This study outlined improvements in the proposed tool-aware fabrication process and clarified the potential role of augmented carpentry in the digital fabrication landscape.
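For readers who want a feel for what "comparing the discrepancies" between a drilled hole and its execution model might involve, here is a minimal Python sketch. It is not taken from the paper or the project's GitHub repository; the function names and the choice of metrics (angle between axes, distance between entry points) are assumptions made for illustration only.

```python
import math

# Hypothetical helpers, not from the Augmented Carpentry codebase.
# A hole is described by an entry point and a drilling direction,
# both given as (x, y, z) tuples in the same units and coordinate frame.

def unit(v):
    """Return v scaled to length 1."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)

def angle_error_deg(planned_axis, drilled_axis):
    """Angle in degrees between the planned and the drilled direction."""
    a = unit(planned_axis)
    b = unit(drilled_axis)
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))

def entry_error(planned_entry, drilled_entry):
    """Straight-line distance between planned and drilled entry points."""
    return math.dist(planned_entry, drilled_entry)
```

Calling `angle_error_deg((0, 0, 1), (0.05, 0, 1))` would report how far a slightly tilted hole strays from a planned vertical one, and `entry_error` does the same for where the bit entered the beam.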

Make Things is a weekly newsletter for the Maker community from Make:. This newsletter lives on the web at makethings.make.co

I’d love to hear from you if you have ideas, projects or news items about the maker community. Email me - [email protected].